When thinking about successful data scraping, most people focus on proxies, scraping tools, and evading bot detection. Yet one crucial factor often overlooked is the structure of the website itself. Poorly structured or dynamically loaded websites can silently ruin scraping projects before they even start.

In this article, we look at how different website structures affect data scraping outcomes, drawing on published industry figures, and at what you should look for when planning your next large-scale scraping operation.

The Growing Complexity of Web Architecture

Modern websites are increasingly built with dynamic elements using JavaScript frameworks like React, Angular, and Vue.js. According to a W3Techs survey, as of early 2024, over 22% of websites use some form of client-side rendering technology.

While these technologies create smoother user experiences, they complicate scraping. Traditional scrapers that simply parse HTML often fail to retrieve data embedded in JavaScript-rendered elements. This increases the need for headless browsers or specialized rendering engines, making scraping slower and costlier.
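A quick way to tell whether a page needs a headless browser is to check whether a value you can see in the browser also appears in the raw HTML response. The following is a minimal sketch using only the Python standard library; the function name `is_in_static_html` and the sample pages are illustrative assumptions, not part of any particular scraping tool.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text that appears outside <script> and <style> tags."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def is_in_static_html(html, expected_text):
    """Return True if expected_text is present in the server-sent markup.

    A False result suggests the value is injected by JavaScript after load,
    so a plain HTML parser will likely not see it.
    """
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return expected_text in " ".join(parser.chunks)


# Hypothetical responses: one server-rendered, one client-rendered shell.
static_page = "<html><body><h1>Blue Widget</h1></body></html>"
client_page = (
    '<html><body><div id="root"></div>'
    '<script>window.DATA = {"name": "Blue Widget"}</script></body></html>'
)
print(is_in_static_html(static_page, "Blue Widget"))
print(is_in_static_html(client_page, "Blue Widget"))
```

If the check fails for the fields you care about, it is worth looking for an underlying JSON endpoint before budgeting for full browser rendering.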

A study by ScrapeOps found that scraping fully client-rendered pages can cost up to 5x more in resources than scraping static HTML pages.

The Hidden Cost of Poorly Structured Data

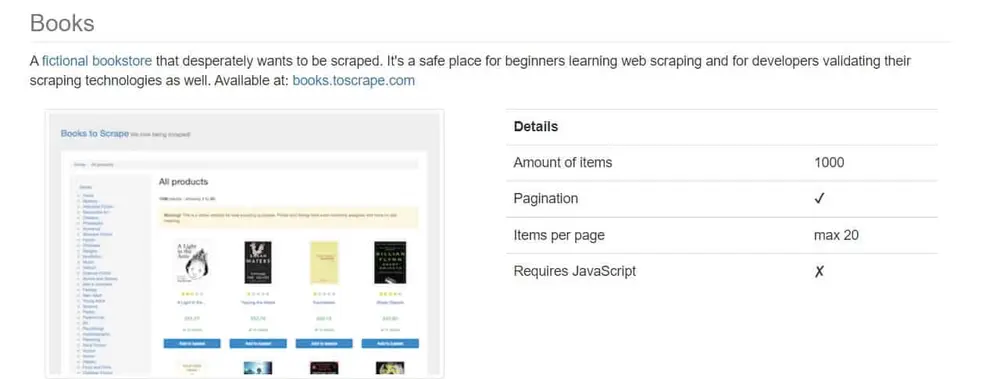

Beyond technical frameworks, the logical structure of a website plays a major role. Websites without consistent tagging, predictable URL structures, or semantic HTML can drastically increase scraping errors.

BuiltWith’s data shows that websites adhering to structured data standards (like Schema.org) have a 37% higher success rate for accurate scraping compared to those that don’t.
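When a site does publish Schema.org data as JSON-LD, the scraper can often read it directly instead of reverse-engineering the page layout. Below is a rough sketch using only the standard library; `extract_json_ld` and the sample markup are hypothetical names chosen for illustration.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the parsed contents of <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # Skip malformed blocks rather than abort the page.


def extract_json_ld(html):
    """Return a list of decoded JSON-LD objects found in the page."""
    parser = JsonLdParser()
    parser.feed(html)
    parser.close()
    return parser.items


# Hypothetical product page fragment.
sample = (
    '<script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Blue Widget"}'
    "</script>"
)
for item in extract_json_ld(sample):
    print(item.get("@type"), item.get("name"))
```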

For instance, scraping an e-commerce site that uses uniform product containers is straightforward. In contrast, scraping a marketplace where each product listing varies wildly in format can require creating multiple customized scraping rules, which inflates development and maintenance costs.
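To illustrate why uniform containers keep costs down, here is a sketch in which a single rule covers every listing. It assumes a hypothetical page where each product sits in an element with class `product` and exposes `name` and `price` classes; real sites will use different names, and a marketplace with inconsistent listings would need one such rule per layout.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag; ignored when tracking nesting.
VOID_TAGS = {"img", "br", "hr", "input", "meta", "link"}


class ProductParser(HTMLParser):
    """Extracts name/price pairs from containers sharing one class name."""

    def __init__(self, container_class="product"):
        super().__init__()
        self.container_class = container_class
        self.products = []
        self._current = None  # dict for the container being read
        self._depth = 0       # nesting depth inside that container
        self._field = None    # field whose text is expected next

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._current is None:
            if self.container_class in classes:
                self._current = {}
                self._depth = 1
            return
        self._depth += 1
        for field in ("name", "price"):
            if field in classes:
                self._field = field

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or self._current is None:
            return
        self._field = None
        self._depth -= 1
        if self._depth == 0:  # container closed: record the product
            self.products.append(self._current)
            self._current = None

    def handle_data(self, data):
        if self._current is not None and self._field and data.strip():
            self._current[self._field] = data.strip()


def extract_products(html, container_class="product"):
    parser = ProductParser(container_class)
    parser.feed(html)
    parser.close()
    return parser.products


# Hypothetical listing markup.
listing = (
    '<div class="product"><h2 class="name">Blue Widget</h2>'
    '<span class="price">$19.99</span></div>'
)
print(extract_products(listing))
```

In practice a dedicated parsing library would usually replace the hand-written parser; the point here is only that consistent markup lets one selector rule do all the work.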

Why Reliable Proxies Are Non-Negotiable

Even if a website is technically easy to scrape, another barrier remains: IP blocking. A report highlighted that over 45% of popular websites use IP-based rate limiting to prevent aggressive crawling.
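Rate limits are easier to live with when the scraper slows down on its own. The sketch below computes how long to wait before retrying a request; it assumes the server signals throttling with a `Retry-After` value given in seconds (the HTTP-date form is not handled), and the function name and defaults are illustrative choices, not a standard.

```python
import random


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Return the number of seconds to wait before retry number `attempt`.

    attempt     -- 0 for the first retry, 1 for the second, and so on
    retry_after -- value of a Retry-After header in seconds, if the server sent one
    base, cap   -- assumed tuning knobs for the exponential schedule
    """
    if retry_after is not None:
        # Honor the server's explicit request.
        return float(retry_after)
    # Exponential backoff with full jitter: pick a random wait between
    # 0 and min(cap, base * 2**attempt) so retries do not synchronize.
    upper = min(cap, base * (2 ** attempt))
    return random.uniform(0, upper)


for attempt in range(4):
    print(attempt, round(backoff_delay(attempt), 2))
```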

This is where a reliable residential proxy service becomes critical. Because residential proxies route traffic through real devices and ISPs, they are less likely to be flagged or blocked than datacenter proxies.

Choosing a reliable provider helps your scraper look like ordinary user traffic rather than an obvious bot network.
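As a rough sketch of how a proxy pool is typically wired into a scraper, the snippet below rotates through a list of proxy endpoints in round-robin order using the standard library. The hostnames, port, and credentials are placeholders, and `make_rotating_openers` is a hypothetical helper; a real provider will document its own endpoints and authentication scheme.

```python
import itertools
import urllib.request

# Placeholder endpoints: replace with the values your provider gives you.
PROXY_POOL = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]


def make_rotating_openers(proxy_urls):
    """Yield (proxy_url, opener) pairs in round-robin order, indefinitely."""
    for proxy_url in itertools.cycle(proxy_urls):
        handler = urllib.request.ProxyHandler(
            {"http": proxy_url, "https": proxy_url}
        )
        yield proxy_url, urllib.request.build_opener(handler)


openers = make_rotating_openers(PROXY_POOL)
proxy_url, opener = next(openers)
# opener.open("https://example.com/") would send the request via proxy_url.
```

Many residential providers rotate exit IPs behind a single gateway address, in which case the pool shrinks to one entry and rotation happens on the provider's side.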

Key Characteristics of Scraper-Friendly Websites

Based on aggregated analysis by Ping Proxies, the websites that are easiest and most cost-effective to scrape usually share these traits:

- Consistent and semantic HTML structure

- Minimal or optional JavaScript rendering

- Logical, paginated URL patterns

- Use of open structured data formats (like JSON-LD)

- Low to moderate anti-scraping defenses

If you’re prospecting new targets, evaluating them based on these criteria can save significant time and scraping resources.
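Predictable pagination is the easiest of these traits to exploit in code: when page numbers live in a query parameter, the full crawl list can be generated up front. Here is a small sketch, assuming a hypothetical site that paginates with a `page` parameter; the URL and parameter name are examples only.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url, first_page, last_page, param="page"):
    """Build one URL per page by setting `param`, keeping other query values."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    urls = []
    for page in range(first_page, last_page + 1):
        query[param] = str(page)
        urls.append(urlunsplit(parts._replace(query=urlencode(query))))
    return urls


for url in page_urls("https://shop.example.com/products?category=widgets", 1, 3):
    print(url)
```

Sites that paginate through opaque cursors or infinite scroll offer no such shortcut, which is part of why they cost more to crawl.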

Final Thoughts

The technical makeup of a website is just as important as your scraping infrastructure. Investing time in choosing scraping-friendly targets, and supporting them with tools like a reliable residential proxy solution, can dramatically improve data quality, speed, and project ROI.

In short, data scraping is not just a battle against detection. It’s a strategic selection game. Know your enemy’s structure first, and you’ve already won half the fight.
