If you want your website to be indexed quickly, optimized for SEO, and ranked higher on search engine results pages (SERPs), one of the first things you need to do is build a proper robots.txt file for WordPress.

The robots.txt file tells search engine bots which parts of your site they may crawl, which makes it an extremely powerful SEO tool. In this article, we’ll walk you through a complete guide to optimizing the WordPress robots.txt file for SEO.

What is WordPress Robots.txt?

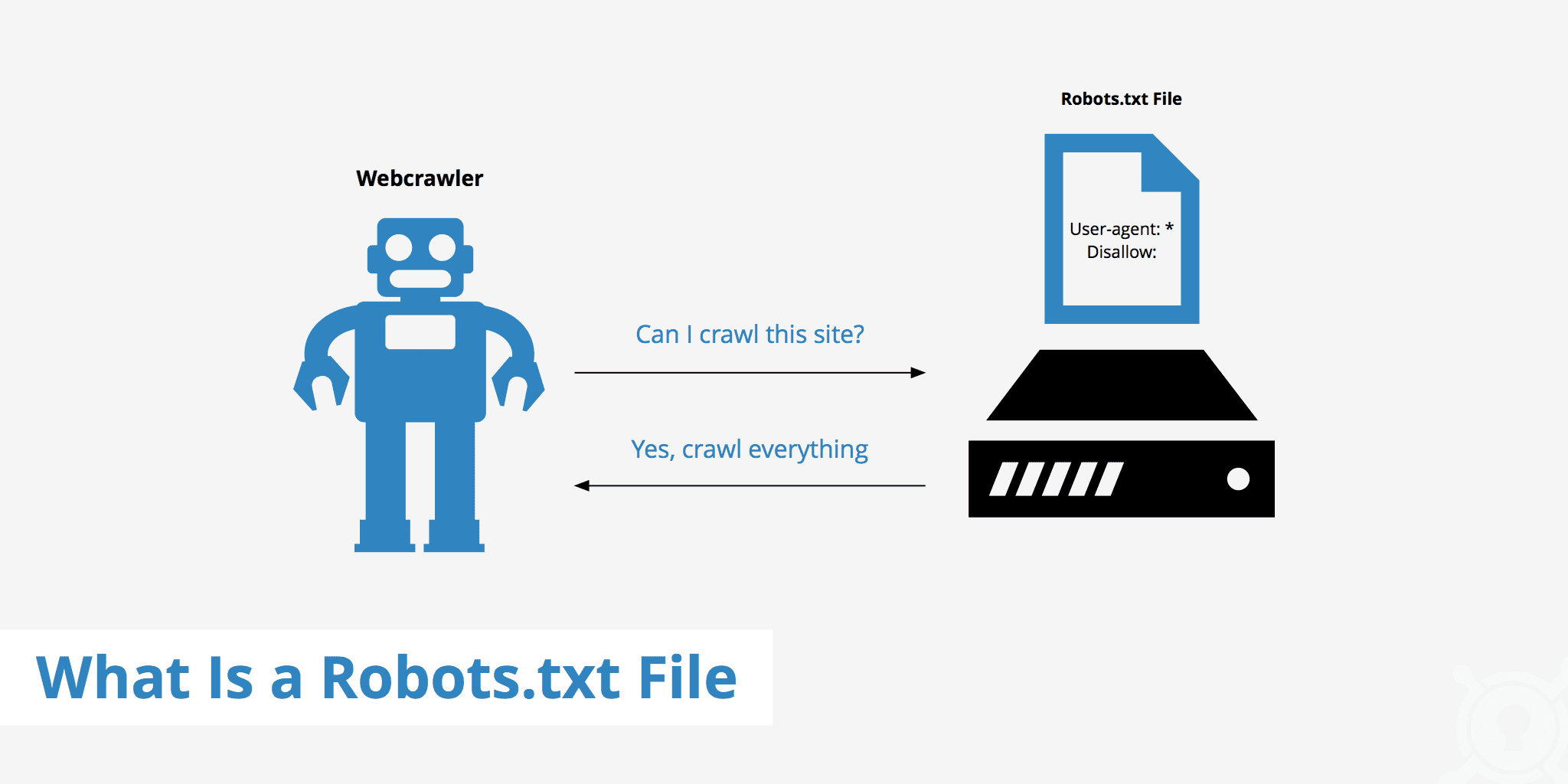

Robots.txt is a plain-text file in a website’s root folder that tells search engine bots which pages and folders they are allowed to crawl.

If you are familiar with how search engines work, you know that during the crawling and indexing phases, search engine bots (crawlers) try to find publicly available pages on the web that they can include in their index.

When a crawler visits a website, the first thing it does is look for and read the site’s robots.txt file. Based on the rules in that file, it builds a list of URLs it is allowed to crawl and, from those, the pages it may index.
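The following minimal Python sketch shows that step using the standard library’s `urllib.robotparser`. It parses a set of rules and filters a list of discovered URLs down to the ones a crawler may fetch. The rules, URLs, and the `crawlable_urls` helper are hypothetical, and real search engines use their own parsers.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical rules a crawler might find at http://www.example.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""

# Hypothetical URLs the crawler has discovered on the site.
CANDIDATES = [
    "http://www.example.com/",
    "http://www.example.com/blog/hello-world/",
    "http://www.example.com/wp-admin/options.php",
]


def crawlable_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Keep only the URLs that robots_txt allows user_agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


print(crawlable_urls(ROBOTS_TXT, CANDIDATES))
# ['http://www.example.com/', 'http://www.example.com/blog/hello-world/']
```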

Why do you need a WordPress robots.txt file?

There are several cases where you should prevent or restrict search engine bots from crawling your website:

Low-Value And Duplicate Content

Besides your real content, your website exposes a lot of other files, such as system setup files and WordPress plugin files.

These files have no value for users. In addition, some of the content on a website may be duplicated. If such content gets indexed, it dilutes the site and lowers the perceived quality of its real content.

Subpages For Setting Up And Testing The Website

When you build a new website with WordPress, you may not have finished designing and setting it up yet, so it is not ready for users. In that case, you should prevent search engine bots from crawling the site.

Some websites also have many subpages that are used only to test features and designs. Letting users reach these pages can hurt the site’s perceived quality and your company’s professionalism.
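Both situations can be handled with robots.txt rules. The sketch below uses Python’s `urllib.robotparser` to compare two hypothetical files. The first is for a site under construction and blocks every path. The second is for a live site and blocks only a `/test/` area. The `/test/` path and the `make_parser` helper are illustrative assumptions, not WordPress defaults.

```python
from urllib.robotparser import RobotFileParser


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# While the site is still being built: block every path for every bot.
UNDER_CONSTRUCTION = """\
User-agent: *
Disallow: /
"""

# Once the site is live: block only a hypothetical /test/ area.
LIVE_WITH_TEST_AREA = """\
User-agent: *
Disallow: /test/
"""

staging = make_parser(UNDER_CONSTRUCTION)
live = make_parser(LIVE_WITH_TEST_AREA)

for path in ["/", "/about/", "/test/new-header/"]:
    # Columns: path, allowed while under construction, allowed once live
    print(path, staging.can_fetch("Googlebot", path), live.can_fetch("Googlebot", path))
```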

The Large-Capacity Website Takes A Long Loading Time

Each search engine bot can crawl only a limited number of pages per visit to your website. When your site has a lot of content, bots need more time to get through it. Once a bot reaches its limit for a visit, the remaining content has to wait until its next visit to be crawled and indexed.

If bots spend that limited capacity on unnecessary files and content first, the quality of your indexed site drops, and bots take longer to reach your important pages.

Constant Crawling Reduces Web Speed

Without a robots.txt file, bots will crawl all of your website’s content. Besides surfacing content your customers don’t need to see, constant crawling can also slow down page loading.

Website speed is a vital factor that affects quality and user experience. Faster pages also tend to rank higher.
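Some crawlers honor a non-standard `Crawl-delay` directive that asks them to wait between requests. Bingbot supports it, but Googlebot ignores it. The sketch below parses a hypothetical file with Python’s `urllib.robotparser` and reads the delay back for each bot. The 10-second value is only an example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask Bingbot to wait 10 seconds between requests,
# and keep every bot out of the admin area.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.crawl_delay("bingbot"))    # 10
# No group with a Crawl-delay matches Googlebot, so the parser reports None.
print(parser.crawl_delay("Googlebot"))  # None
```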

For these reasons, you should create a robots.txt file for WordPress that tells bots which areas to crawl and which to skip. A standard WordPress robots.txt file makes bots crawl and index your website more efficiently, which in turn improves your SEO results.

Is It Necessary To Have This File For Your WordPress Website?

If you don’t have a robots.txt file, Google will still crawl and index your website. However, you won’t be able to tell search engines which pages or folders they should not crawl.

When you first start a blog, this doesn’t matter much. However, as your site grows and you publish lots of content, you will likely want more control over how it is crawled and indexed.

Search bots have a crawl quota for each website, which means they crawl only a certain number of pages during a crawl session. If they don’t finish all the pages on your site, they come back and continue in the next session. The remaining pages are not dropped, only delayed.

This can slow down how quickly your website gets indexed. You can fix this by blocking search bots from crawling unnecessary areas, such as the wp-admin area, the plugins directory, and the themes directory.

Blocking unnecessary pages saves crawl quota, which lets search engines index the important pages of your website more quickly.
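A toy model can illustrate the effect of a crawl quota. The Python sketch below assumes a fixed, hypothetical quota of 50 URLs per crawl session and a made-up site with 100 posts plus 200 admin, plugin, and theme URLs. It counts how many sessions a bot would need to get through everything it is allowed to crawl. Real crawl budgets are not published, and bots prioritize pages in ways this model ignores, so treat the numbers as illustrative only.

```python
from __future__ import annotations

import math
from urllib.robotparser import RobotFileParser

# Hypothetical quota: URLs a bot fetches per crawl session.
PAGES_PER_SESSION = 50

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
"""


def sessions_needed(urls: list[str], robots_txt: str | None, quota: int = PAGES_PER_SESSION) -> int:
    """Toy model: sessions needed to crawl every allowed URL at a fixed quota per session."""
    if robots_txt is not None:
        parser = RobotFileParser()
        parser.parse(robots_txt.splitlines())
        urls = [u for u in urls if parser.can_fetch("Googlebot", u)]
    return math.ceil(len(urls) / quota)


# Hypothetical site: 100 posts plus 200 admin, plugin, and theme URLs.
site = (
    [f"/blog/post-{i}/" for i in range(100)]
    + [f"/wp-admin/page-{i}.php" for i in range(80)]
    + [f"/wp-content/plugins/plugin-{i}/readme.txt" for i in range(70)]
    + [f"/wp-content/themes/theme-{i}/style.css" for i in range(50)]
)

print(sessions_needed(site, None))        # 6: all 300 URLs at 50 per session
print(sessions_needed(site, ROBOTS_TXT))  # 2: only the 100 posts remain
```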

Another good reason to use a robots.txt file is to stop search engines from crawling specific posts or pages. This is not the safest way to hide content from search engines, but it helps keep those pages out of search results. A noindex meta tag is the more reliable option for that purpose.
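When you block individual posts or pages this way, remember that robots.txt rules match URL paths by prefix. This is true both in Google’s matching and in Python’s `urllib.robotparser`, so a trailing slash matters. The sketch below uses hypothetical slugs and a hypothetical `blocked` helper to show the difference.

```python
from urllib.robotparser import RobotFileParser


def blocked(rule_path: str, url_path: str) -> bool:
    """True if a single 'Disallow: <rule_path>' rule stops Googlebot from fetching url_path."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {rule_path}"])
    return not parser.can_fetch("Googlebot", url_path)


print(blocked("/private-post/", "/private-post/"))    # True
print(blocked("/private-post/", "/private-post-2/"))  # False: the trailing slash limits the match
print(blocked("/private-post", "/private-post-2/"))   # True: any path starting with the prefix
```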

The Ideal Guide To Optimizing Robots.txt For SEO

Many blogs run a very minimal robots.txt file on their WordPress sites. The exact rules vary with each site’s needs, but a simple example looks like this:

```text
User-agent: *
Disallow:

Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
```

This robots.txt file lets all bots crawl everything, because an empty Disallow rule blocks nothing. It also points them to the XML sitemaps that list all of your content.
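You can confirm what this minimal file means with Python’s `urllib.robotparser`. Its `site_maps()` method requires Python 3.8 or later. The check shows that the empty `Disallow:` blocks nothing and that both sitemap URLs are picked up.

```python
from urllib.robotparser import RobotFileParser

MINIMAL = """\
User-agent: *
Disallow:

Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(MINIMAL.splitlines())

# An empty Disallow value means nothing is disallowed.
print(parser.can_fetch("Googlebot", "http://www.example.com/any/page/"))  # True
# Sitemap lines are collected no matter where they appear in the file.
print(parser.site_maps())
```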

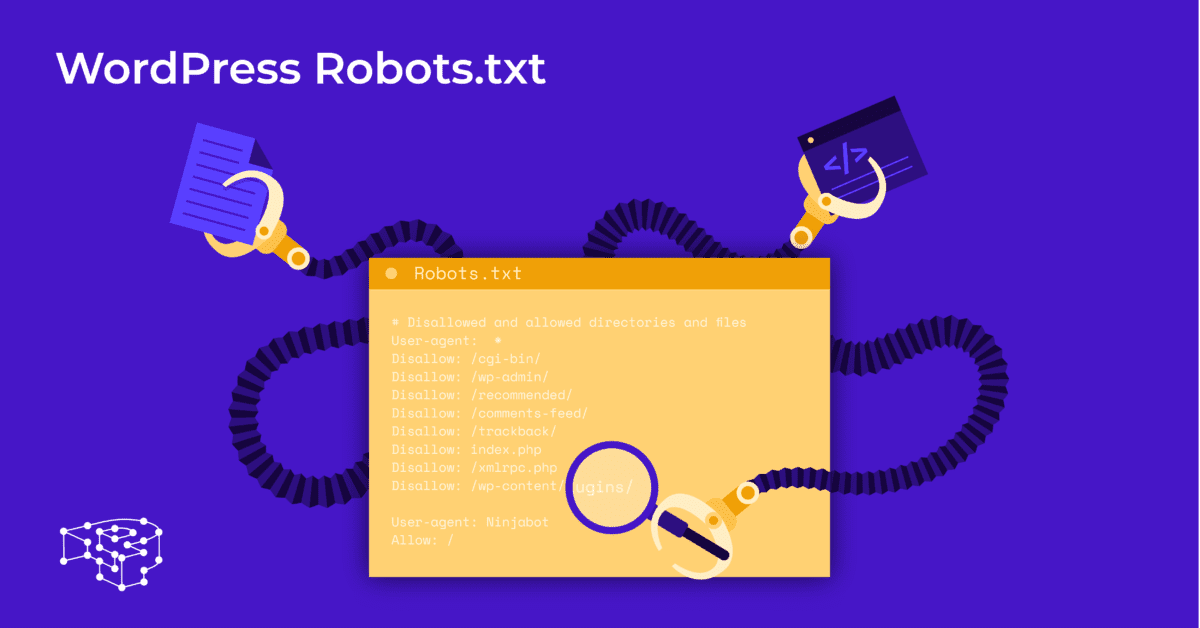

For WordPress websites, we recommend a more complete file along these lines:

```text
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Disallow: /readme.html
Disallow: /refer/

Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
```

This file allows search bots to crawl all images and files in the WordPress uploads folder. It blocks them from the plugins folder, the admin area, the readme file, and affiliate links, which this example keeps under /refer/.

Listing your sitemaps in the robots.txt file also makes it easy for Googlebot and other crawlers to find all the pages on your website.
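Before uploading the recommended file, you can check it with Python’s `urllib.robotparser`. The test paths and the `load` helper below are hypothetical. Python’s parser applies the first matching rule, while Google applies the most specific (longest) one. The rules in this file do not overlap, so both give the same answers. The last lines show why each group’s lines should stay together: this parser treats a blank line after `User-agent` as the end of the group and silently ignores the rule that follows.

```python
from urllib.robotparser import RobotFileParser

RECOMMENDED = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Disallow: /readme.html
Disallow: /refer/

Sitemap: http://www.example.com/post-sitemap.xml
Sitemap: http://www.example.com/page-sitemap.xml
"""


def load(text: str) -> RobotFileParser:
    """Parse robots.txt text held in memory."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load(RECOMMENDED)
expected = {
    "/wp-content/uploads/2024/05/photo.jpg": True,     # media stays crawlable
    "/wp-content/plugins/some-plugin/file.php": False,
    "/wp-admin/options.php": False,
    "/readme.html": False,
    "/refer/some-product/": False,                      # affiliate links
    "/hello-world/": True,                              # ordinary posts are unaffected
}
for path, allowed in expected.items():
    assert rules.can_fetch("Googlebot", path) == allowed, path

# Pitfall: the blank line ends the User-agent group in this parser,
# so the Disallow rule is ignored and the admin area stays crawlable.
split_group = load("User-agent: *\n\nDisallow: /wp-admin/\n")
print(split_group.can_fetch("Googlebot", "/wp-admin/options.php"))  # True
```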

Creating A WordPress Robots.txt File For Your Website

Create A Robots.txt File Using Notepad

Notepad is a minimal plain-text editor from Microsoft, commonly used for writing code in languages such as Pascal, C++, and HTML.

Your WordPress robots.txt must be a plain-text file encoded in ASCII or UTF-8, named exactly “robots.txt,” and saved in the root directory of your website. A file can contain many rules, with one rule per line.
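Crawlers look for this file only at the root of a host, so its location matters as much as its content. The Python sketch below derives the URL where a crawler would request robots.txt for any page URL. The `robots_txt_url` name is hypothetical. Each subdomain and protocol counts as a separate host with its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the URL at which crawlers look for robots.txt for page_url's host."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("http://www.example.com/blog/hello-world/?ref=home"))
# http://www.example.com/robots.txt
print(robots_txt_url("https://shop.example.com/cart/"))
# https://shop.example.com/robots.txt
```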

Create a new file in Notepad, save it as robots.txt, and add the rules described above.

Finally, upload the file to your site’s public_html directory, and you are done.
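If you prefer generating the file with a script instead of Notepad, the following Python sketch writes the rules as UTF-8 plain text, one rule per line. The `render_robots_txt` and `write_robots_txt` helpers are hypothetical, and the rules are copied from the recommended file above, so adjust them for your own site.

```python
from __future__ import annotations

from pathlib import Path

# Rules copied from the recommended file; "" produces a blank line before the sitemaps.
RULES = [
    "User-Agent: *",
    "Allow: /wp-content/uploads/",
    "Disallow: /wp-content/plugins/",
    "Disallow: /wp-admin/",
    "Disallow: /readme.html",
    "Disallow: /refer/",
    "",
    "Sitemap: http://www.example.com/post-sitemap.xml",
    "Sitemap: http://www.example.com/page-sitemap.xml",
]


def render_robots_txt(rules: list[str]) -> str:
    """Join the rules with one rule per line and end with a newline."""
    return "\n".join(rules) + "\n"


def write_robots_txt(site_root: Path, rules: list[str]) -> Path:
    """Write site_root/robots.txt as UTF-8 plain text and return its path."""
    site_root.mkdir(parents=True, exist_ok=True)
    path = site_root / "robots.txt"
    path.write_text(render_robots_txt(rules), encoding="utf-8")
    return path
```

For example, `write_robots_txt(Path("public_html"), RULES)` creates a local public_html/robots.txt that you can then upload. Here, public_html is simply assumed to be a local folder that mirrors your site’s root.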

Create A Robots.txt File Using The Yoast SEO Plugin

Yoast SEO is one of the best-rated plugins for optimizing your website’s content for SEO. It also works as a robots.txt plugin for WordPress, letting you create and edit the file directly from your dashboard.

First, go to your WordPress Dashboard.

In the Dashboard, select SEO => Tools. On some WordPress versions or themes, the path is Tools => Yoast SEO instead.

On the Yoast SEO tools page, choose File Editor.

Select Create New to create the robots.txt file, or edit the existing one.

Select Save changes to robots.txt to save your custom robots.txt file.

Open your site’s robots.txt in a browser (for example, http://www.example.com/robots.txt) to see the new rules you have just added.

Check The Robots.txt File On Google Search Console

To start, log in to Google Search Console and add your website as a property.

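Select Go to old version to switch to the legacy interface, where the robots.txt Tester is located. Google has since retired this tester, and current versions of Search Console show a robots.txt report under Settings instead.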

Under Crawl, select robots.txt Tester, enter the rules you have set up, and click Submit.

Check the number of errors and warnings in the result, and correct any problems it reports.
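For a quick local sanity check before using Google’s tool, the Python sketch below flags a few common mistakes. It is a hypothetical helper, not Google’s validator. The set of known directives and what counts as an error or a warning are this sketch’s own choices.

```python
from __future__ import annotations

# Directives this sketch recognizes; anything else is reported as a warning.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> tuple[list[str], list[str]]:
    """Rough local check of robots.txt text. Returns (errors, warnings)."""
    errors: list[str] = []
    warnings: list[str] = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        if ":" not in line:
            errors.append(f"line {number}: missing ':' in {raw!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive {field!r}")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                errors.append(f"line {number}: {field} rule before any User-agent line")
            elif value and not value.startswith(("/", "*")):
                warnings.append(f"line {number}: {field} path should start with '/'")
    return errors, warnings


sample = """\
User-agent: *
Disalow: /wp-admin/
Disallow /wp-content/plugins/
Disallow: refer/
"""
errors, warnings = lint_robots_txt(sample)
print(errors)    # the line missing its colon
print(warnings)  # the misspelled directive and the path without a leading '/'
```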

Select Download updated code to download the new robots.txt file and re-upload it to the original directory, or select Ask Google to Update to have Google pick up the change automatically.

Final Thoughts

In this article, you have learned why a robots.txt file matters and how to set one up for WordPress. A well-structured robots.txt file helps search engine bots interact with your website more efficiently, so your site’s information is updated accurately and can reach more users.

Start creating your own robots.txt file for WordPress and improve your website’s SEO right away. You can also find several helpful SEO optimization tools on our website that we recommend you try!


Contact US | ThimPress:

Website: https://thimpress.com/

Fanpage: https://www.facebook.com/ThimPress

YouTube: https://www.youtube.com/c/ThimPressDesign

Twitter (X): https://twitter.com/thimpress